Your Team's Corrections, Promoted to Standards

MindMeld detects your team's corrections, promotes them to standards, and injects only the 10 that matter for each session.

No docs to write. No rules files to maintain. Standards that earn their authority through usage.

How It Works

Your team already corrects AI output every day. MindMeld captures those corrections and promotes the patterns that stick.

Static Rules (Everyone Else)

Those corrections are wasted—nobody captures them.

Correction-to-Standard Pipeline

Every correction makes the system smarter. Permanently.

The Standards Maturity Model

Standards aren't born—they're earned. Every correction moves a pattern through a lifecycle that no static rules file can replicate.

Provisional

Pattern detected from corrections. Soft suggestion injected.

3+ corrections detected

"We noticed your team prefers X"

Solidified

Validated across multiple developers. Strong recommendation.

85%+ team adoption

"Team consensus: always do X"

Reinforced

Battle-tested invariant. Violations flagged automatically.

97%+ compliance

"This breaks team standard X"

Standards are measured, not mandated.

Maturity is earned through real usage data—not declared by someone writing a wiki page.

Unused standards get demoted automatically. Your team stays in control of what sticks.
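The lifecycle above can be sketched as a small classification function. This is an illustration only, not MindMeld's implementation. It assumes a pattern's stage is derived from three numbers: how many corrections produced it, how many distinct developers it has been validated across, and the fraction of relevant sessions that followed it. The 3-correction, 85% and 97% thresholds come from this page. The `Stage`, `PatternStats` and `classify` names, the 60% demotion floor (borrowed from the Provisional adoption figure quoted further down), and the two-developer minimum for "validated across multiple developers" are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Stage(Enum):
    CANDIDATE = "candidate"      # hypothetical: seen, but too few corrections so far
    DEMOTED = "demoted"          # hypothetical: adoption fell below the floor
    PROVISIONAL = "provisional"
    SOLIDIFIED = "solidified"
    REINFORCED = "reinforced"


@dataclass
class PatternStats:
    corrections: int   # corrections that produced this pattern
    developers: int    # distinct developers the pattern has been validated across
    adoption: float    # fraction of relevant sessions that followed it (0.0-1.0)


# Thresholds from the page; the demotion floor and developer minimum are assumptions.
MIN_CORRECTIONS = 3
SOLIDIFIED_ADOPTION = 0.85
REINFORCED_COMPLIANCE = 0.97
DEMOTION_FLOOR = 0.60
MIN_DEVELOPERS = 2


def classify(stats: PatternStats) -> Stage:
    """Map a pattern's usage statistics to a maturity stage."""
    if stats.corrections < MIN_CORRECTIONS:
        return Stage.CANDIDATE
    if stats.adoption < DEMOTION_FLOOR:
        return Stage.DEMOTED  # the team stopped following it, so it loses authority
    if stats.developers >= MIN_DEVELOPERS:
        if stats.adoption >= REINFORCED_COMPLIANCE:
            return Stage.REINFORCED
        if stats.adoption >= SOLIDIFIED_ADOPTION:
            return Stage.SOLIDIFIED
    return Stage.PROVISIONAL


print(classify(PatternStats(corrections=5, developers=4, adoption=0.98)).value)  # reinforced
```

Because the stage is a pure function of usage data, a standard moves up or down whenever the numbers change, with no one editing a rules file.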

See It In Action

This is what happens when you start a coding session with MindMeld installed.

MindMeld is opinionated. It picks the 10 standards that matter for THIS session and ignores the rest.

If a standard never gets used, it gets demoted automatically.

The Context Window Problem

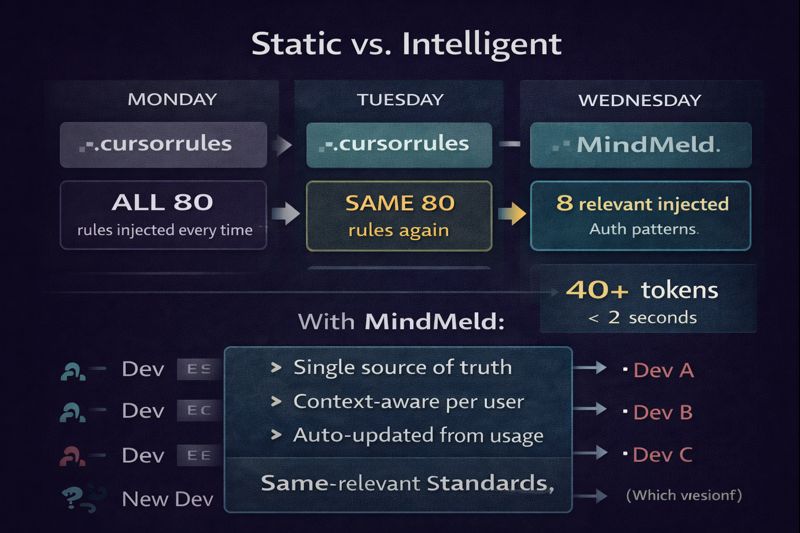

Static rules files dump everything into every session. Most of it is irrelevant to what you're working on today.

Static Rules (.cursorrules, CLAUDE.md)

- All 80+ rules injected every session

- Same rules for auth work and UI work

- Grows forever, nobody maintains it

- Wastes thousands of context tokens

Intelligent Injection (MindMeld)

- Top 10 relevant standards per session

- Context-aware: knows what you're touching

- Auto-curates based on usage data

- Saves context for actual coding

Works Where Context Is Precious

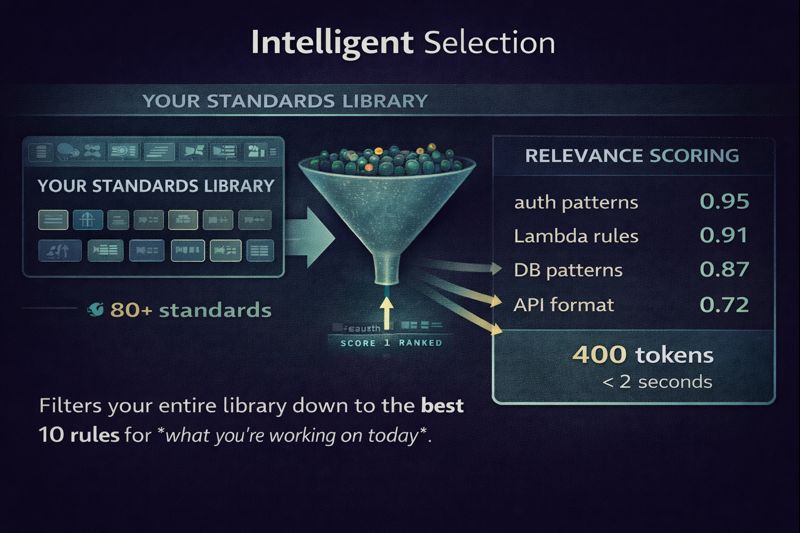

Cloud models typically offer around 200k tokens of context. Local models offer 8k to 64k. Either way, spending 3,200 tokens on a static rules file is wasteful. MindMeld's 400-token injection means your standards fit everywhere, even on a laptop running Ollama.
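To put those numbers in proportion, this snippet computes the share of a context window each approach consumes before any code is loaded. It uses the figures from the paragraph above: 3,200 tokens for a static rules file and 400 for the injection. The window sizes are illustrative assumptions ("8k" taken as 8,192 tokens, "64k" as 65,536, "200k" as 200,000). Real limits vary by model.

```python
# Token figures from the paragraph above; window sizes are illustrative.
STATIC_RULES_TOKENS = 3_200
INJECTED_TOKENS = 400

windows = {"local 8k": 8_192, "local 64k": 65_536, "cloud 200k": 200_000}


def share(tokens: int, window: int) -> float:
    """Percentage of the context window consumed by injected rules."""
    return 100 * tokens / window


for name, size in windows.items():
    print(f"{name:>10}: static {share(STATIC_RULES_TOKENS, size):5.1f}%  "
          f"injected {share(INJECTED_TOKENS, size):4.1f}%")
```

On an 8k local model, the static file uses about 39% of the window. The 400-token injection uses under 5%.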

Intelligent Selection

Your entire standards library scored by relevance. Only the best matches injected into each session.

File Context

Knows which files you're editing and matches relevant patterns.

Usage Data

Standards your team actually follows get promoted. Ignored ones get demoted.

<2 Seconds

Relevance scoring and injection happen in under 2 seconds at session start.
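The selection described above can be sketched as a score-and-rank step. This is not MindMeld's actual algorithm. The sketch assumes each standard carries a list of file glob patterns it applies to and an adoption rate from usage data. The `Standard` type, the 0.7/0.3 weighting, and glob matching via Python's standard `fnmatch` module are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from fnmatch import fnmatch


@dataclass
class Standard:
    name: str
    globs: list[str]   # hypothetical: file patterns this standard applies to
    adoption: float    # fraction of sessions where the team followed it (0.0-1.0)


def file_match(std: Standard, open_files: list[str]) -> float:
    """Fraction of the files being edited that this standard applies to."""
    if not open_files:
        return 0.0
    matched = sum(1 for f in open_files if any(fnmatch(f, g) for g in std.globs))
    return matched / len(open_files)


def select(library: list[Standard], open_files: list[str], k: int = 10) -> list[Standard]:
    """Return the k highest-scoring standards for the current session."""
    scored = []
    for std in library:
        match = file_match(std, open_files)
        if match == 0:
            continue  # unrelated to the files being touched: never injected
        # Weighted blend of file context and usage data (weights are illustrative).
        scored.append((0.7 * match + 0.3 * std.adoption, std))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [std for _, std in scored[:k]]


library = [
    Standard("cached-db-client", ["*lambda*"], 0.92),
    Standard("no-inline-styles", ["*.tsx"], 0.88),
    Standard("jwt-validation", ["src/auth/*"], 0.97),
]
print([s.name for s in select(library, ["src/auth/login.ts", "src/auth/session.ts"])])
# ['jwt-validation']
```

Filtering out standards with no file match before ranking keeps UI rules out of an auth session, even when those UI rules are heavily adopted.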

Static vs. Intelligent

Different work needs different rules. Static files don't know the difference.

Team Divergence

Without a single source of truth, every developer's rules file drifts. New hires don't know which version to trust.

New Hires Hit the Ground Running

Your accumulated knowledge becomes their starting point. Day one productivity, not month three.

😰 Traditional Onboarding

- Week 1: "Where's the documentation?"

- Week 2: "Why do we do it this way?"

- Week 4: "Oh, nobody told me that"

- Month 2: Still asking basic questions

- Month 3: Finally productive

🚀 With MindMeld

- Day 1: AI knows all team patterns

- Day 1: Tribal knowledge is injected

- Day 1: Standards enforced automatically

- Week 1: Shipping code that fits

- Week 2: Fully productive

You can grow juniors again.

When AI inherits your team's accumulated wisdom, junior developers produce senior-quality work. Not because they're seniors—because your knowledge compounds.

Juniors learn YOUR patterns, not Stack Overflow patterns

The Hidden Cost of AI Productivity

Anthropic's own research reveals what happens when AI replaces human collaboration.

"Claude is replacing colleague consultations as the first stop for questions."

Great for speed. Terrible for knowledge transfer. When seniors stop explaining things to juniors because AI is faster, institutional knowledge stops accumulating. When that senior leaves, the knowledge leaves too.

MindMeld is pair programming where everyone stays on the keyboard. Your patterns teach through every AI session.

Read Anthropic's Research →

How MindMeld Differs

The common approaches to AI coding standards each have a fundamental limitation. Here is how they compare with MindMeld.

Static Rules Files

CLAUDE.md, .cursorrules, etc.

- No context awareness

- No maturity lifecycle

- Go stale immediately

- No team convergence

You maintain them. They never learn.

Vector DB / RAG Tools

Retrieval-augmented generation

- Retrieves, doesn't curate

- No standards-specific maturity

- Can't distinguish stale from current

- No correction detection

Good for search. Useless for standards.

MindMeld

Correction-to-standard pipeline

- Context-aware injection

- Maturity lifecycle (provisional → reinforced)

- Learns from corrections automatically

- Team convergence measured

Standards that earn their authority.

Built-In Eval, Not Bolted On

Enterprise buyers ask three questions. MindMeld answers them with data that's inherent to the system—not a separate dashboard you have to build.

"Show me accuracy"

Every standard carries its own adoption metric. Provisional standards are followed about 60% of the time, Solidified 85%+, Reinforced 97%+. No separate eval framework needed.

Standards followed % = accuracy

"What about edge cases?"

Standards that don't generalize get demoted automatically. If your team stops following a rule, it loses authority. Edge cases are handled by the lifecycle itself.

Demotion rate = robustness signal

"Prove it with real data"

The system learns FROM real usage—your team's actual coding sessions, not synthetic benchmarks. Proof is built into the mechanism. Every metric comes from production work.

Promotion velocity = learning rate

You don't need a separate eval framework.

Adoption metrics ARE the eval. When 97% of your team follows a standard without being told to, that's not a rule—it's a proven practice.
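All three metrics can be derived from the same usage log. This sketch assumes each logged observation records whether a session's code followed a standard that applied to it, and that each stage change is logged as a promotion or a demotion. The `Observation` and `StageChange` records, and the definition of promotion velocity as promotions per week, are hypothetical illustrations rather than MindMeld's data model.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    standard: str
    followed: bool   # did the session's code comply with the standard?


@dataclass
class StageChange:
    standard: str
    promoted: bool   # True = moved up a stage, False = demoted


def adoption(observations: list[Observation], standard: str) -> float:
    """'Standards followed %': share of applicable sessions that complied."""
    relevant = [o for o in observations if o.standard == standard]
    if not relevant:
        return 0.0
    return sum(o.followed for o in relevant) / len(relevant)


def demotion_rate(changes: list[StageChange]) -> float:
    """Share of stage changes that were demotions (robustness signal)."""
    if not changes:
        return 0.0
    return sum(not c.promoted for c in changes) / len(changes)


def promotion_velocity(changes: list[StageChange], weeks: float) -> float:
    """Promotions per week over the observed window (learning rate)."""
    return sum(c.promoted for c in changes) / weeks
```

For example, a standard followed in 97 of 100 applicable sessions has an adoption of 0.97.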

Tested Across 8 Local Models

Same task. Same 400 tokens of injected standards. Every model scored on 6 Lambda best practices.

| Model | Baseline (of 6) | + MindMeld (of 6) | Gain |
|---|---|---|---|
| codellama (7B) | 1/6 | 2/6 | +1 |
| devstral (14B) | 1/6 | 5/6 | +4 |
| llama3.2 (3B) | 1/6 | 4/6 | +3 |
| mistral (7B) | 3/6 | 3/6 | +0 |
| phi3:medium (14B) | 2/6 | 4/6 | +2 |
| qwen2.5 (14B) | 2/6 | 5/6 | +3 |
| qwen3-coder (30B) | 2/6 | 5/6 | +3 |
| starcoder2 (15B) | 2/6 | 4/6 | +2 |

7/8

models improved

+2.25

avg gain from 400 tokens

0

models got worse

Task: write an AWS Lambda + PostgreSQL handler. Scored on 6 best practices (no connection pools, cached client, env vars, wrapHandler, no client.end, SSM resolve).

Reproducible demo: mindmeld-injection-demo.sh --all
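The headline figures can be recomputed directly from the table. This short script copies the baseline and with-MindMeld scores (out of 6 practices per model) and derives how many models improved, the average gain, and how many got worse.

```python
# (baseline, with MindMeld) scores out of 6, copied from the table above
results = {
    "codellama (7B)": (1, 2),
    "devstral (14B)": (1, 5),
    "llama3.2 (3B)": (1, 4),
    "mistral (7B)": (3, 3),
    "phi3:medium (14B)": (2, 4),
    "qwen2.5 (14B)": (2, 5),
    "qwen3-coder (30B)": (2, 5),
    "starcoder2 (15B)": (2, 4),
}

gains = [after - before for before, after in results.values()]
improved = sum(g > 0 for g in gains)
worse = sum(g < 0 for g in gains)
avg_gain = sum(gains) / len(gains)

print(f"{improved}/{len(gains)} models improved")  # 7/8 models improved
print(f"+{avg_gain:.2f} avg gain")                   # +2.25 avg gain
print(f"{worse} models got worse")                   # 0 models got worse
```

Mistral is the only model with no change. Devstral shows the largest gain (+4).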

<2s

Relevance scoring + injection

80+

Standards in the library

10

Relevant standards per session

5+

AI tools supported

Intelligent, not exhaustive. Your team's context window is for coding, not reading irrelevant rules.

Get started in minutes

Replace your static rules file with intelligent standards injection.

Why Teams Choose MindMeld

Intelligent standards management that scales with your project and team.

Relevance Scoring

Every standard scored against your current session context. Only the highest-relevance rules injected—never the full dump.

Standards That Harden

Corrections become suggestions, then hard constraints. Provisional → Solidified → Reinforced. Knowledge that compounds.

Auto-Curation

Usage data shows which standards are followed vs. ignored. Stale rules demoted automatically. No maintenance required.

Cross-Platform

Same standards across Claude Code, Cursor, Codex, and Windsurf. Your team uses what they prefer—rules stay consistent.

Single Source of Truth

No more divergent rules files per developer. One centrally managed standards library, context-aware per user.

Scales With You

10 standards or 200—MindMeld handles the complexity. Add compliance, AWS, or custom packs without bloating context.

Simple Pricing

Start free. Scale as your team grows. Contributing saves $50/month at every tier.

Code FOUNDER80 auto-applied at checkout. Limited-time offer.

Anonymized standard categories (not code, not prompts) contribute to community packs like "AWS Lambda Best Practices." You approve each contribution.

Solo

Session memory only

- Project/Object session memory

- 1 participant, 3 projects

- Create your own standards

- Local context persistence

- Cross-platform sync

Pro Solo

Intelligent injection + community standards

Founding member rate - first 12 months

- Context-aware injection

- Relevance scoring

- 5 participants, 5 projects

- Shared team standards

- Cross-platform sync

Pro

Full library + control

Founding member rate - first 12 months

- Standards picker (per-user control)

- Compliance + AWS standards

- 10 participants, unlimited projects

- Analytics dashboard

- All Pro Solo features

Need 25+ seats?

Enterprise includes SSO, executive reports, knowledge capture, and onboarding acceleration.

See Enterprise Plans

Founding member pricing: 80% off for 12 months. After Year 1, prices return to standard rates.

Contributing mode saves an additional $10/month and helps build the community standards.